The making of Allô Papa Noël

In the realm of telecommunications, creating memorable and engaging experiences for customers has become a hallmark of innovation. Bouygues Telecom, a leading player in the industry, in collaboration with the renowned advertising agency BETC, embarked on an ambitious project to redefine holiday interactions. This initiative, rooted in the spirit of Christmas, sought to leverage cutting-edge GenAI technology to offer something truly magical: direct conversations with Santa Claus via WhatsApp.

Architecting AI for Millions

Since 2019, Bouygues Telecom, with BETC's creative prowess, has consistently delivered captivating holiday experiences. In 2024, they took it a step further by integrating real-time voice interactions, personalized videos, and enchanting Christmas tales, all seamlessly delivered through a sophisticated chatbot. This project stands as a testament to how simple yet powerful ideas, supported by advanced technology, can enhance brand platforms and deliver unparalleled user experiences.

In this article, we delve into the technical intricacies of developing this AI-powered chatbot, exploring the challenges faced, the innovative solutions implemented, and the remarkable outcomes achieved. Join us as we uncover the magic behind bringing Santa Claus to life in the digital age.

Objectives

- Serve 2 million users over one month with minimal latency.

- Handle traffic spikes during peak times, such as evenings, weekends & Christmas Day.

- Moderate user messages to prevent misuse or inappropriate content.

- Optimize execution costs.

Pipelines

From the initial design stages, it became clear that the application’s primary role would involve receiving inputs (text, audio), processing them through various tools, and producing outputs (text, audio, video). A microservices pipeline architecture was chosen as the appropriate solution to meet these needs.

With anticipated traffic of at least 250k messages per hour, we needed a high-throughput, reliable message reception system.

Incoming messages are sent by Vonage to a webhook connected to a lightweight AWS Lambda function that verifies integrity and transmits messages to an SQS FIFO queue. A separate Lambda function, which is the core of the application, processes messages in batches of 10.
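For scale, the anticipated traffic works out to the following average rates. This is our own arithmetic; evening and Christmas Day peaks will sit well above this average.

```latex
\frac{250\,000\ \text{messages/h}}{3600\ \text{s/h}} \approx 69.4\ \text{messages/s},
\qquad
\frac{69.4\ \text{messages/s}}{10\ \text{messages/batch}} \approx 6.9\ \text{batches/s}
```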

Benefits of Using SQS FIFO:

- High-throughput reception without loss.

- Throttle processing if the application is overloaded.

- Orderly processing of messages per user.

- Parallel processing of up to 10 messages.

- Automatic retry in case of processing failure.
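A minimal sketch of how the ingestion function might enqueue a message so that these guarantees hold. It is written in Python for illustration (the production function was Node.js). It assumes the webhook payload exposes the sender under `from` and a unique ID under `message_uuid`. Both are assumed field names, so check them against the Vonage Messages API reference. The function builds keyword arguments for boto3's SQS `send_message`. Keying `MessageGroupId` on the user gives per-user ordering, while different users stay in independent groups that can be processed in parallel.

```python
import hashlib
import json


def build_fifo_message(queue_url: str, payload: dict) -> dict:
    """Build keyword arguments for boto3's SQS ``send_message`` call.

    Assumes the inbound payload has a sender under "from" and a unique
    message ID under "message_uuid" (assumed field names for this sketch).
    """
    sender = str(payload["from"])
    body = json.dumps(payload, separators=(",", ":"), sort_keys=True)
    # One message group per user: SQS FIFO delivers a group's messages in
    # order, while different groups can be consumed in parallel.
    # Hashing keeps the raw phone number out of the group ID.
    group_id = hashlib.sha256(sender.encode()).hexdigest()
    # Deduplicating on the provider's message ID stops a webhook redelivery
    # inside the FIFO deduplication window from being enqueued twice.
    dedup_id = str(payload.get("message_uuid") or hashlib.sha256(body.encode()).hexdigest())
    return {
        "QueueUrl": queue_url,
        "MessageBody": body,
        "MessageGroupId": group_id,
        "MessageDeduplicationId": dedup_id,
    }
```

With a client from `boto3.client("sqs")`, the call would be `sqs.send_message(**build_fifo_message(queue_url, payload))`.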

Lambda Function Benefits:

- Automatic scaling within seconds.

- Batch processing using asynchronous JavaScript ensures smooth handling of other messages even when one is awaiting an external service response (database, API, etc.).
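The production consumer was written in Node.js. What follows is an illustrative Python asyncio sketch of the same idea, not the project's code. It runs the messages of a batch concurrently while keeping each user's messages in order. It returns Lambda's partial batch response (`batchItemFailures`, which requires `ReportBatchItemFailures` on the event source mapping), so only failed messages are retried. Failing a message together with the later messages of the same user is our assumption for preserving FIFO order. AWS's guidance on partial batch responses for FIFO queues is worth checking before relying on it.

```python
import asyncio
from collections import defaultdict
from typing import Awaitable, Callable


async def process_batch(
    records: list[dict],
    handle: Callable[[dict], Awaitable[None]],
) -> dict:
    """Process an SQS FIFO batch concurrently and report partial failures."""
    # Group records by user (message group), keeping SQS delivery order.
    groups: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        groups[record["attributes"]["MessageGroupId"]].append(record)

    failed: list[str] = []

    async def run_group(group: list[dict]) -> None:
        # Messages of one user are handled strictly one after another.
        for i, record in enumerate(group):
            try:
                await handle(record)
            except Exception:
                # Report this message and every later one from the same user,
                # so the retry preserves per-user ordering.
                failed.extend(r["messageId"] for r in group[i:])
                return

    # While one user's handler awaits an external service, others progress.
    await asyncio.gather(*(run_group(g) for g in groups.values()))
    return {"batchItemFailures": [{"itemIdentifier": m} for m in failed]}
```

In a Python Lambda, the handler would return `asyncio.run(process_batch(event["Records"], handle_message))`, with `handle_message` a hypothetical coroutine wrapping the database and API calls.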

Moderation

To maintain the wholesome Christmas spirit, user inputs undergo a two-step validation process:

First step: Rapidly exclude obviously inappropriate content at low cost and latency using a prohibited words search. A fuzzy search method automatically includes similar variants (plural, gendered forms, etc.).

Second step: The LLM performs a global classification of the whole message. Its strength lies in detecting subtler contextual issues, such as allowed words used in an inappropriate context.
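A minimal sketch of this two-step check. It uses `difflib`'s similarity ratio as the fuzzy matcher, which is an assumption since the article does not name the method. A caller-supplied function stands in for the LLM classifier, and the word list is a hypothetical placeholder.

```python
import re
import unicodedata
from difflib import SequenceMatcher
from typing import Callable

# Hypothetical placeholder list; the real list is not published.
PROHIBITED = ["idiot", "badword"]


def normalize(text: str) -> str:
    """Lowercase and strip accents so 'IDIOTE' and 'idiote' compare equal."""
    decomposed = unicodedata.normalize("NFKD", text.lower())
    return "".join(c for c in decomposed if not unicodedata.combining(c))


def wordlist_hit(text: str, threshold: float = 0.85) -> bool:
    """Step 1: fuzzy-match every token against the prohibited words."""
    for token in re.findall(r"\w+", normalize(text)):
        for word in PROHIBITED:
            if SequenceMatcher(None, token, word).ratio() >= threshold:
                return True
    return False


def moderate(text: str, llm_is_inappropriate: Callable[[str], bool]) -> str:
    """Return 'rejected_wordlist', 'rejected_llm' or 'accepted'."""
    if wordlist_hit(text):  # cheap and fast: no LLM call needed
        return "rejected_wordlist"
    if llm_is_inappropriate(text):  # step 2: contextual classification
        return "rejected_llm"
    return "accepted"
```

The threshold is a trade-off. At 0.85, "idiots" or "idiote" still match "idiot" (ratio ≈ 0.91), while the unrelated "idiom" (ratio 0.8) does not. Lower values catch more variants but produce more false positives.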

Personalized Video

The purpose of this pipeline is to create a video featuring Santa Claus delivering a user-provided message. The video includes natural lip movements synchronized with the voice and, optionally, a customized AI-generated background image. Users are limited to providing a short text message, ensuring that the resulting video does not exceed 30 seconds. Our target was to generate this video within a maximum time of 60 seconds.
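One way to enforce the 30-second cap before any generation starts is to estimate speech duration from the word count. The speaking rate below is an assumed value for illustration and would need calibrating against the actual synthesized voice.

```python
import re

# Assumed average speaking rate of the synthesized voice (hypothetical;
# measure it on real TTS output before relying on it).
WORDS_PER_SECOND = 2.5
MAX_VIDEO_SECONDS = 30


def estimated_duration(text: str, words_per_second: float = WORDS_PER_SECOND) -> float:
    """Rough speech duration in seconds, estimated from the word count."""
    return len(re.findall(r"\w+", text)) / words_per_second


def accept_video_message(text: str) -> bool:
    """Reject messages whose spoken version would exceed the video cap."""
    return estimated_duration(text) <= MAX_VIDEO_SECONDS
```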

To achieve this, we used the ElevenLabs API for voice generation and Stable Diffusion for background creation. However, no available API met our needs for synchronizing the actor's lip movements with the audio. After testing several options, we found that the Wav2Lip model produced satisfactory results.

The main challenge with Wav2Lip was that it came as a raw PyTorch model, which did not perform well for CPU-based inference. We needed to execute it in a GPU-enabled Python environment, which was incompatible with the rest of our serverless Node.js application. To address this, we built a video processing cluster using AWS EC2 g4dn instances equipped with GPUs.

Precomputation Optimization

The Wav2Lip model came with a functional script to generate synchronized videos from an input video and audio. However, our tests revealed that generating a typical 30-second video took around 120 seconds, which exceeded our performance target.

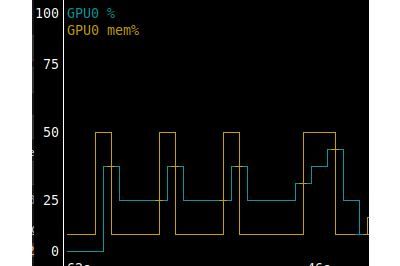

To improve efficiency, we analyzed the process, which consists of multiple alternating steps between CPU (orange) and GPU (green) tasks, as shown in image 1.

After measuring the execution time of each step, it became clear that the slowest tasks were, in order:

- Detect actor face

- Lips sync inference

- Crop & blend mouth

- Merge final video output

Every step up to the "Crop actor mouth" step was independent of user input and always produced the same results, so we decided to precompute these steps. The remaining steps could not be precomputed, as they depend on the dynamic, user-provided inputs. Additionally, we moved the final video encoding to the GPU, using ffmpeg with the h264_nvenc encoder, to reduce CPU load.
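A sketch of both ideas in Python, the language of the video server, under several assumptions. `precompute` is a hypothetical callable that stands for the deterministic steps up to "Crop actor mouth", and its results are cached on disk with `pickle`. The final mux uses ffmpeg's `h264_nvenc` encoder, which needs an NVIDIA GPU and an ffmpeg build with NVENC support.

```python
import pickle
from pathlib import Path
from typing import Any, Callable


def load_or_precompute(
    actor_video: Path,
    cache_dir: Path,
    precompute: Callable[[Path], Any],
) -> Any:
    """Return the static-stage results (face boxes, mouth crops, ...).

    `precompute` (hypothetical) only runs when no cached result exists for
    this actor video; later requests reuse the pickled result.
    """
    cache_file = cache_dir / f"{actor_video.stem}.pkl"
    if cache_file.exists():
        return pickle.loads(cache_file.read_bytes())
    result = precompute(actor_video)
    cache_dir.mkdir(parents=True, exist_ok=True)
    cache_file.write_bytes(pickle.dumps(result))
    return result


def nvenc_encode_cmd(frames_video: str, audio: str, output: str) -> list[str]:
    """ffmpeg command muxing lip-synced frames with the speech track,
    encoding the video stream on the GPU with h264_nvenc."""
    return [
        "ffmpeg", "-y",
        "-i", frames_video,             # silent video from the lip-sync step
        "-i", audio,                    # generated Santa voice
        "-map", "0:v:0", "-map", "1:a:0",
        "-c:v", "h264_nvenc",           # GPU encoder instead of CPU libx264
        "-c:a", "aac",
        "-shortest",
        output,
    ]
```

The command list can be run with `subprocess.run(cmd, check=True)`.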

Parallelism Optimization

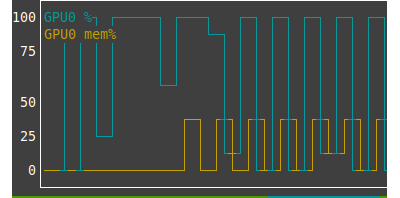

Running the optimized pipeline revealed significant alternation between CPU and GPU activities, with the GPU rarely reaching full utilization despite being a costly resource.

To address this, we experimented with parallel processing, allowing multiple videos to be processed simultaneously. While one video is in a CPU-bound step, another can use the GPU, which keeps the GPU consistently busy.

Benchmarking helped identify the optimal number of parallel tasks by balancing GPU, CPU, and memory usage without overloading any of the resources.
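A minimal sketch of a bounded worker pool, assuming a hypothetical `render` function that runs the per-request steps for one video. Threads are used here because PyTorch and OpenCV release Python's GIL in most native calls and ffmpeg runs as a separate process. A process pool would be the alternative if CPU-bound Python code dominated. The default of 10 is the figure the benchmarks in this section settled on.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable

# Default parallelism; 10 is the benchmarked sweet spot for g4dn.4xlarge.
MAX_PARALLEL_VIDEOS = 10


def render_all(
    jobs: Iterable[dict],
    render: Callable[[dict], str],
    max_parallel: int = MAX_PARALLEL_VIDEOS,
) -> list[str]:
    """Render videos with at most `max_parallel` jobs in flight at once.

    While one job is in a CPU-bound step (decoding, cropping, blending),
    another can run lip-sync inference on the GPU, so the GPU idles less.
    Results come back in the same order as `jobs`.
    """
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        return list(pool.map(render, jobs))
```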

Here are some results of our benchmarks using our 30-second reference video (batch time -> average compute time per video):

5 parallel videos:

- g4dn.xlarge: 130 s -> 26 s/video

- g4dn.2xlarge: 73 s -> 14.6 s/video

- g4dn.4xlarge: 60 s -> 12 s/video

- g4dn.8xlarge: 56 s -> 11.2 s/video

10 parallel videos:

- g4dn.xlarge: 263 s -> 26.3 s/video

- g4dn.2xlarge: 138 s -> 13.8 s/video

- g4dn.4xlarge: 92 s -> 9.2 s/video

- g4dn.8xlarge: 87 s -> 8.7 s/video

Note: the CPU-only c5.2xlarge instance handled only 2 videos in 186 s (93 s/video), demonstrating that GPU compute is essential.

Tests with different AWS EC2 instance types showed that processing 10 videos in parallel on g4dn.4xlarge instances provided the best trade-off between speed and cost. While larger instances like g4dn.8xlarge achieved slightly better performance, the additional cost did not justify the marginal gain.
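The trade-off can be made explicit by converting the benchmark into cost per video. The article does not give prices, so the hourly price is an input you fill in from current AWS on-demand pricing for the chosen region. The prices used in any example call are placeholders.

```python
# Benchmark figures from this article: batch time (s) for 10 parallel videos.
BENCH_10_PARALLEL = {
    "g4dn.xlarge": 263,
    "g4dn.2xlarge": 138,
    "g4dn.4xlarge": 92,
    "g4dn.8xlarge": 87,
}


def per_video_seconds(batch_seconds: float, parallel_videos: int) -> float:
    """Average compute time per video when a batch runs in parallel."""
    return batch_seconds / parallel_videos


def cost_per_video(batch_seconds: float, parallel_videos: int, hourly_price: float) -> float:
    """Share of the instance's hourly price spent on one video."""
    return hourly_price * per_video_seconds(batch_seconds, parallel_videos) / 3600


def rank_instances(bench: dict[str, float], prices: dict[str, float], parallel: int = 10) -> list[str]:
    """Instance types sorted from cheapest to most expensive per video."""
    return sorted(bench, key=lambda name: cost_per_video(bench[name], parallel, prices[name]))
```

A larger instance only pays off if its per-video time falls at least in proportion to its price. From g4dn.4xlarge to g4dn.8xlarge, the per-video time drops only from 9.2 s to 8.7 s (about 5%).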

Audio Conversation

This pipeline enables a turn-by-turn exchange of audio messages between the user and Santa Claus. When an audio file is received from the user, it is converted into text using a Speech-to-Text service, producing a text version of the user’s message that the LLM agent can process.

The user’s message is then sent to the LLM, along with a system instruction directing the model to behave as Santa Claus, as well as the conversation history to ensure dialogue continuity. This enables the model to generate a relevant and coherent response in the context of the ongoing conversation.

Once the response is formulated, it is converted back into speech using the Text-to-Speech service, employing a voice designed to match Santa Claus.
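The turn logic can be sketched as follows. The three services are passed in as plain functions, which are hypothetical stand-ins for the actual speech-to-text, LLM and text-to-speech clients. Messages use the common role/content chat format. Capping the history at a fixed number of turns is our assumption, made to bound prompt size and cost.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical placeholder for the Santa Claus system instruction.
SYSTEM_PROMPT = "You are Santa Claus. Reply warmly and briefly."


@dataclass
class Conversation:
    history: list[dict] = field(default_factory=list)
    max_turns: int = 10  # assumed cap on remembered user/assistant pairs


def audio_turn(
    conv: Conversation,
    user_audio: bytes,
    speech_to_text: Callable[[bytes], str],
    chat: Callable[[list[dict]], str],
    text_to_speech: Callable[[str], bytes],
) -> bytes:
    """One exchange: user audio in, Santa's spoken reply out."""
    user_text = speech_to_text(user_audio)
    # System instruction first, then recent history, then the new message.
    recent = conv.history[-2 * conv.max_turns:]
    messages = [{"role": "system", "content": SYSTEM_PROMPT}, *recent,
                {"role": "user", "content": user_text}]
    reply = chat(messages)
    # Remember both sides so the next turn keeps dialogue continuity.
    conv.history += [{"role": "user", "content": user_text},
                     {"role": "assistant", "content": reply}]
    return text_to_speech(reply)
```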

Christmas tales

For this feature, users provide three words via text. These words are moderated and compiled into an LLM prompt to generate a custom Christmas story. The result is then voiced using text-to-speech, creating the impression of Santa narrating.
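A sketch of how the three words might be validated and compiled into a prompt. The template wording and the `is_allowed` moderation callback are hypothetical. Splitting on whitespace and punctuation rather than on hyphens keeps compound words such as "arc-en-ciel" intact.

```python
import re
from typing import Callable

# Hypothetical prompt wording, for illustration only.
TALE_TEMPLATE = (
    "You are Santa Claus. Tell a short, gentle Christmas story for children "
    "that naturally weaves in these three words: {words}."
)


def build_tale_prompt(user_input: str, is_allowed: Callable[[str], bool]) -> str:
    """Validate the user's three words and compile them into an LLM prompt."""
    # Split on whitespace, commas and semicolons; hyphenated words stay whole.
    words = [w for w in re.split(r"[\s,;]+", user_input.strip()) if w]
    if len(words) != 3:
        raise ValueError(f"expected exactly 3 words, got {len(words)}")
    rejected = [w for w in words if not is_allowed(w)]
    if rejected:
        raise ValueError(f"words rejected by moderation: {rejected}")
    return TALE_TEMPLATE.format(words=", ".join(words))
```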

Prompt Engineering and Model Selection

During the development phase, especially when using the LangChain service, we monitored interactions between testers and the LLM agents to identify any undesirable or imprecise behaviors.

This process allowed us to refine our prompts and replay past interactions to ensure the agents responded in a way that met the product’s requirements. These test samples also helped us evaluate costs and select the model that offered the best quality-to-price ratio, ensuring a balance between performance and efficiency.
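Replaying recorded conversations against candidate models can be sketched as a small harness. Everything here is illustrative. Each model is a hypothetical function that returns a reply and its token count, and `check` encodes whatever the product requirements are. The per-1,000-token price is a single blended input rather than a real rate.

```python
from dataclasses import dataclass
from typing import Callable

ModelFn = Callable[[list[dict]], tuple[str, int]]  # messages -> (reply, tokens)


@dataclass
class ReplayResult:
    model: str
    pass_rate: float
    cost: float


def replay(
    transcripts: list[list[dict]],
    models: dict[str, tuple[ModelFn, float]],  # name -> (call, price per 1k tokens)
    check: Callable[[list[dict], str], bool],
) -> list[ReplayResult]:
    """Replay each recorded prompt on every model; best pass rate first,
    ties broken by lower cost."""
    results = []
    for name, (call, price_per_1k) in models.items():
        passed, tokens = 0, 0
        for messages in transcripts:
            reply, used = call(messages)
            tokens += used
            passed += check(messages, reply)
        results.append(ReplayResult(name, passed / len(transcripts), tokens / 1000 * price_per_1k))
    return sorted(results, key=lambda r: (-r.pass_rate, r.cost))
```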

CI/CD & IaC

As is customary at makemepulse, this project was developed using continuous integration and continuous delivery across multiple environments. Both the application code and the infrastructure are stored and versioned in a GitHub repository.

A GitHub Actions pipeline automatically handles all the necessary deployment steps every time changes are pushed to the branches:

- Building the Node.js application.

- Building the Python video server Docker container.

- Updating the infrastructure using AWS CDK.

- Deploying the Node.js application to AWS Lambda.

- Pushing the video server Docker image to AWS ECR.

- Refreshing the EC2 instances.

- Invalidating the caches.

This setup ensures that the latest version of the application is always available to our QA team and clients without requiring additional attention from developers. If an issue occurs during deployment, developers are notified and can quickly review logs to address the problem.

Managing the infrastructure as code allows us to take advantage of the same benefits, simplifying the configuration of the various AWS components we use. It also enables us to quickly deploy new environments with different configurations with minimal effort and a reduced risk of mistakes.

For example, during the project, we tested using EC2 g6 instances in a different AWS region. In just a few minutes, the entire infrastructure and application were up and running in the new region.

Quality of Service

The application depends on several internal and external services. If any of these services become unavailable or experience increased response times, the entire application may be affected. Therefore, it is crucial to monitor each component of the application to quickly and accurately identify any issues.

To achieve this, we collect latency metrics for each stage of the pipeline using AWS CloudWatch. These metrics are displayed on dashboards for real-time monitoring. They were also essential during development, allowing us to confirm the impact of code optimizations, select external services, adjust auto-scaling strategies, and conduct load testing. During the live phase, CloudWatch alarms notified us in real time of any abnormal values, providing 24/7 monitoring without the need for constant human supervision.
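One way to publish per-stage latency from a Lambda function is CloudWatch's Embedded Metric Format (EMF). The function writes a structured JSON line to its logs, and CloudWatch extracts the metric from it. The article does not say which mechanism was used. This Python sketch assumes EMF, and the namespace and stage names are hypothetical.

```python
import json
import time
from contextlib import contextmanager
from typing import Callable


@contextmanager
def stage_latency(stage: str, namespace: str = "SantaBot",
                  emit: Callable[[str], None] = print):
    """Time a pipeline stage and emit one EMF log line with its latency."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        emit(json.dumps({
            "_aws": {
                "Timestamp": int(time.time() * 1000),  # epoch milliseconds
                "CloudWatchMetrics": [{
                    "Namespace": namespace,
                    "Dimensions": [["Stage"]],  # one metric series per stage
                    "Metrics": [{"Name": "Latency", "Unit": "Milliseconds"}],
                }],
            },
            "Stage": stage,
            "Latency": elapsed_ms,
        }))
```

Usage: `with stage_latency("speech_to_text"): text = transcribe(audio)`, where `transcribe` is a hypothetical call. Alarms can then be defined on the `Latency` metric for each `Stage` dimension value.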

Conclusion

The microservices architecture, whether for internal or external components, is a highly suitable solution for this type of application.

It allowed us to easily integrate applications written in different languages (Node.js/Python) and run them in various environments (serverless/virtual server). It also enabled cost savings, as only tasks requiring GPU processing are executed in GPU-enabled environments. Additionally, load management became smoother, particularly with the use of queues that can throttle processing during periods of overload.

On the LLM side, the technical integration was straightforward; however, the real challenge lay in prompt engineering. Several iterations are necessary, alternating between writing and testing, to achieve the desired responses. Adding backend logic allowed us to guide the agent with high precision during key steps in user interactions, such as ensuring it says 'Goodbye' at the right moment, like now...